Dunder Data Challenge #2 - Explain the 1,000x Speed Difference when taking the Mean

Welcome to the second edition of the Dunder Data Challenge series designed to help you learn python, data science, and machine learning. Begin working on any of the challenges directly in a Jupyter Notebook courtesy of Binder (mybinder.org).

In this challenge, your goal is to explain why taking the mean of the following DataFrame is more than 1,000x faster when setting the parameter numeric_only to True.

Learn Data Science with Python

I have several online and in-person courses available on dunderdata.com to teach you Python, data science, and machine learning.

Online Courses

- Master the Fundamentals of Python— A comprehensive introduction to Python (300+ pages, 150+ exercises)

- Master Data Analysis with Python — The most comprehensive course available to learn pandas. (800+ pages and 500+ exercises)

- Master Machine Learning with Python — A deep dive into doing machine learning with scikit-learn constantly updated to showcase the latest and greatest tools. (300+ pages)

- All Access Pass! — Get lifetime access to all current and future online courses for one low price!

In-Person Courses

- Take a hands-on, interactive, in-person class with me

Social Media

I frequently post my python data science thoughts on social media. Follow me!

Corporate Training

If you have a group at your company looking to learn directly from an expert who understands how to teach and motivate students, let me know by filling out the form on this page.

Video Available!

A video tutorial of me completing this challenge is available on YouTube.

The Challenge

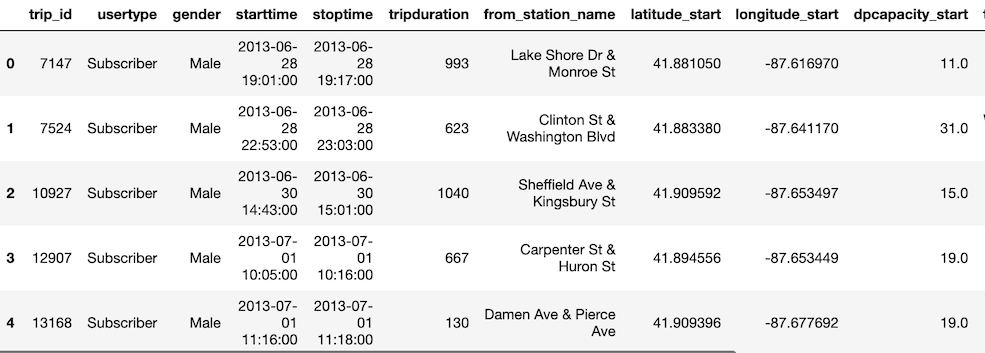

The bikes dataset below has about 50,000 rows. Calling the mean method on the entire DataFrame returns the mean of all the numeric columns. If we set the parameter numeric_only to True, the exact same result is returned. But, using the second option results in a speed difference of more than 1,000 times, reducing the completion time from over 40 seconds down to around 15 milliseconds.

The challenge is to explain why this speed difference exists despite each of these operations returning the exact same result. The solution is fairly nuanced and requires a deep understanding of pandas.

>>> import pandas as pd

>>> bikes = pd.read_csv('../data/bikes.csv')

>>> bikes.head()

>>> bikes.shape

(50089, 19)

Taking the mean

Calling the mean method with the defaults is extremely slow.

>>> bikes.mean()

trip_id 9.472308e+06

tripduration 7.168678e+02

latitude_start 4.190001e+01

longitude_start -8.764464e+01

dpcapacity_start 2.134022e+01

latitude_end 4.190058e+01

longitude_end -8.764485e+01

dpcapacity_end 2.124171e+01

temperature 6.260824e+01

visibility 8.148827e+00

wind_speed 7.070111e+00

precipitation -9.239627e+03

dtype: float64

Setting the parameter numeric_only to True makes a huge difference even though the returned result is the same.

>>> bikes.mean(numeric_only=True)

trip_id 9.472308e+06

tripduration 7.168678e+02

latitude_start 4.190001e+01

longitude_start -8.764464e+01

dpcapacity_start 2.134022e+01

latitude_end 4.190058e+01

longitude_end -8.764485e+01

dpcapacity_end 2.124171e+01

temperature 6.260824e+01

visibility 8.148827e+00

wind_speed 7.070111e+00

precipitation -9.239627e+03

dtype: float64

Timing each operation

There is over 1,000x difference in performance — from 40 seconds to 15 ms

>>> %timeit -n 1 -r 1 bikes.mean()

37.6 s ± 0 ns per loop (mean ± std. dev. of 1 run, 1 loop each)

>>> %timeit -n 1 -r 1 bikes.mean(numeric_only=True)

19 ms ± 0 ns per loop (mean ± std. dev. of 1 run, 1 loop each)

Solution

The solution relies on a thorough understanding of the object data type. DataFrame columns that are of the object data type may contain any Python object. Object columns may be composed of integers, floats, strings, lists, dictionaries, other DataFrames, or any other object. Typically, columns with the object data type contain only strings, but this isn’t guaranteed. The object data type is the most flexible and it is this flexibility that causes the tremendous slowdown above.

Integers as objects

Let’s create a Series of integers and calculate some summary statistics on it. Note that the data type is formally a 64-bit integer after creation.

>>> s_int = pd.Series([10, 99, -123, 88])

>>> s_int

0 10

1 99

2 -123

3 88

dtype: int64

Verify the data type.

>>> s_int.dtype

>>> dtype('int64')

Let’s calculate the sum and mean for the Series with integer data type.

>>> s_int.sum()

74>>> s_int.mean()

18.5

Within pandas, the astype method may be used to change the data type of a Series. Let's change this Series so that its data type is object.

>>> s_obj = s_int.astype('object')

>>> s_obj0 10

1 99

2 -123

3 88

dtype: object

Both the sum and mean method work for columns with object data type and return the same results from above.

>>> s_obj.sum()

74

>>> s_obj.mean()

18.5

If you are enjoying this article, consider purchasing the All Access Pass! which includes all my current and future material for one low price.

Not a good idea

Typically, you would never want to convert a Series of integers to object as you would ruin the optimizations granted to you through the numpy library. A Series that has a data type of ‘int64’ has its data stored internally as a numpy array which stores its data directly in contiguously allocated memory using a C integer array. By converting a Series to the object data type, each integer is no longer stored as a C integer but as a Python integer object (a much bulkier object). Let’s verify this by retrieving the type of an individual value in each numpy array.

>>> type(s_int.values[0])

numpy.int64>>> type(s_obj.values[0])

int

Operations on object arrays are slow

Changing the data type of a column of integers to object will have no impact on the result for several methods, but performance will decline enormously. Below, a numpy array of 1 million integers is created. It is then summed as both an integer data type and as an object with the object being 60x slower.

>>> import numpy as np

>>> a_int = np.random.randint(0, 10, 1000000)

>>> a_obj = a_int.astype('object')>>> a_int

array([3, 4, 8, ..., 8, 3, 2])>>> a_obj

array([3, 4, 8, ..., 8, 3, 2], dtype=object)>>> %timeit -n 5 a_int.sum()

523 µs ± 76.3 µs per loop (mean ± std. dev. of 7 runs, 5 loops each)>>> %timeit -n 5 a_obj.sum()

30.4 ms ± 920 µs per loop (mean ± std. dev. of 7 runs, 5 loops each)

Strings can be added together

One interesting property of strings in Python is that they can be concatenated together with the plus operator.

>>> string1 = 'mac'

>>> string2 = 'hine'

>>> string1 + string2

'machine'

String Series

Whenever you have a column of data in pandas that contains strings, its data type will always be object. There is no specific string data type in pandas. Let’s create a Series of strings and verify that its data type is indeed object.

>>> s = pd.Series(['The', 'quick', 'brown', 'fox'])

>>> s

0 The

1 quick

2 brown

3 fox

dtype: object

Summing a Series of strings

The sum Series method simply adds together every value in the Series. Because addition is a valid operation with strings in Python, the method completes on our current Series.

>>> s.sum()

'Thequickbrownfox'

Taking the mean of a string Series

Taking the mean of a Series of strings is meaningless and pandas will raise an error. Let's attempt this and make a note of the error message.

>>> s.mean()

...

TypeError: Could not convert Thequickbrownfox to numeric

Summing and then dividing

The error message reads ‘Could not convert Thequickbrownfox to numeric’. This implies that pandas has taken the time to compute the sum first before trying to divide by the total length of the Series.

Why is there no error with the bikes DataFrame?

You might be wondering why our bikes DataFrame did not raise an error when taking the mean, but the above Series did. DataFrames have a concept called nuisance columns, which are columns where a calculation is unable to be computed. These nuisance columns are silently (without an error or warning) dropped from the result. Only columns where the operation is successful are returned. Taking the mean of a DataFrame with columns that don’t have a mean is valid.

For instance, we can turn our string Series into a one-column DataFrame with the to_frame method and then compute the mean. Notice that there is no error here as there was above when computed on a Series with the same data. Instead, an empty Series is returned as the one column in the DataFrame is a nuisance column.

>>> df = s.to_frame('Words')

>>> df.head()

>>> df.mean()

Series([], dtype: float64)

Explaining what happens during the challenge problem

When taking the mean of the bikes DataFrame above, pandas first sums every single column regardless of its data type. Once the sum is complete, then it divides by the number of rows to get the mean of that column. For columns of strings, it is only at this stage where the division happens that pandas is unable to compute a mean and declares it a nuisance column.

Concatenating strings is extraordinarily more expensive than adding integers or floats and since every single value in a string column is first concatenated together with the call to mean explains why the operation is so terribly slow. There are 50,000 rows in the bikes DataFrame resulting in 50,000 string concatenations before the division occurs.

Setting the numeric_only parameter to True informs pandas to not even attempt to sum the object data type columns, which is why we see the huge gap in performance when it is used even though the result is the same.

Why can’t pandas skip columns of strings?

It does seem that the logical thing to do is for pandas to skip columns where the mean is not a valid option such as columns with strings in them. Since object columns can contain any Python object, it could be possible that the mean is a valid operation as we saw in our first Series from above.

pandas does not make any assumptions about the data contained in the object column. It just follows its procedure for calculating the mean, which is summing the column and then dividing by the length. If at any point an error occurs, the column is declared a nuisance and dropped from the result.

Can’t pandas build a special case for this?

Yes, it would still be possible for pandas to inspect values when taking the mean of an object column and if it is a data type that does not have a mean raise the error immediately at that point.

An actual string data type

A better solution for pandas would be to have a data type just for strings. It’s quite unfortunate that we are stuck with the infinitely flexible object data type which can never guarantee the data type of an individual value. Many errors could be avoided and performance increased (as in this challenge) if there were a string data type.

Master Python, Data Science and Machine Learning

Immerse yourself in my comprehensive path for mastering data science and machine learning with Python. Purchase the All Access Pass to get lifetime access to all current and future courses. Some of the courses it contains:

- Master the Fundamentals of Python— A comprehensive introduction to Python (300+ pages, 150+ exercises)

- Master Data Analysis with Python — The most comprehensive course available to learn pandas. (800+ pages and 500+ exercises)

- Master Machine Learning with Python — A deep dive into doing machine learning with scikit-learn constantly updated to showcase the latest and greatest tools. (300+ pages)